A Commentary on Hoelian Futurism

"Beware the Promethean urge, for it is both our greatest gift and most threatening danger."

My perspective on The Intrinsic Perspective

Every once in a while, you come across a thinker so captivating and refreshing that you end up binge-reading their entire body of work. Lately, I have found myself doing exactly this with neuroscientist Erik Hoel, exhaustively consuming his excellent Substack, The Intrinsic Perspective, which I highly recommend[1].

In addition to being a gifted yet accessible writer (a difficult balance to strike), Hoel is a particularly evocative commentator on science, technology, and futurism. More specifically, he holds some rather pointed – and I would say, contrarian – opinions regarding the precise manner in which the future should unfold, opinions that have both deeply resonated with and sharply conflicted with my own thinking in this domain. Given the pertinence of this topic to much of what we will eventually consider here in Methuselah’s Library, I want to address some of what Hoel has written over the past several months – giving it the obvious and appropriate moniker, Hoelian futurism – and use it to expand on my own views.

Thus, while I never anticipated writing “A Response” to anyone in particular, I feel compelled to do so now, using a number of Hoel’s posts as a lens to bring some of my own hazy ideas into focus. Perhaps it would be more accurate to call this piece a commentary, as it is in no way an attack on or criticism of Hoel’s work[2], but rather an intellectual exercise to further explore a question of the utmost importance: what does a fulfilling and positive future entail, and how do we bring it forth?

As avid readers of Methuselah’s Library will have noticed, we are once again taking a break from the Methuselah dividend series. But if you continue reading, you will find that radical life extension plays a crucial role in my answer to that question. Thus, we will maintain a thin veneer of coherence between our meager (but growing) collection of essays.

Finally, while not entirely necessary (as I will be quoting relevant passages at length), I would suggest reading several of Hoel’s essays before continuing with this post; I have listed them in the following footnote[3]. If nothing else, I would encourage you to read “How to prevent the coming inhuman future”, which was the primary inspiration for this writing.

A Hoelian future is a human future

As you may have gathered from reading some of his essays, Hoel’s vision of the future – while permissive of much of what one might call traditional science fiction (i.e., space colonization, life extension, etc.) – is a distinctly restrained and tempered techno-optimism. There is no tolerance for the excesses of transhumanism and its explicit goal of replacing organic intelligence with something supposedly superior…something synthetic. Instead, a Hoelian future gives primacy to biological humans and is willing to sacrifice any technological incursion that threatens this essence. I think this is best illustrated in the following passage from “How to prevent the coming inhuman future”:

There can still be an exciting and dynamic future without taking any of these paths. And there’s still room for the many people interested in longtermism, and contributing to the future, to make serious, calculable, and realistic contributions to the welfare of that future. Humans might live on other planets, develop technology that seems magical by today’s standards, colonize the galaxy, explore novel political arrangements and ways of living, live to be healthy into our hundreds, and all this would not change the fundamental nature of humans in the manner the other paths would (consider that in A Midsummer Night’s Dream, many of the characters are immortal). Such future humans, even if radically different in their lives than us, even if considered “transhuman” by our standards (like having eliminated disease or death), could likely still find relevancy in Shakespeare.

This commitment to preserving not just a semblance, but the majority, of what makes us biological humans – messy, fleshy beings with both prefrontal cortexes and limbic systems – as we wade into the future is what sets Hoelian futurism apart. Hoel is not shy about this core aspect of his outlook either. If there were any doubt about where humans belong in his worldview, let it be dispelled by the conclusion of the above-quoted essay:

Humans forever! Humans forever! Humans forever!

While I personally find this sort of jubilation excessive, I greatly admire Hoel for so emphatically staking his position – a position I find intellectually courageous given how out of step anthropocentric futurism has become in our current era.

The Kurzweilian Consortium

It almost goes without saying that Hoelian futurism as I have characterized it is an unpopular position amongst the vanguard of modern futurists. Indeed, in some quarters, Hoel’s vision of the future would be described as some combination of backwards bio-chauvinism and downright Luddism. But before delving into why such insults are undeserved, I will try to more fully define those who would oppose a Hoelian future. Enter the Kurzweilian Consortium[4].

If you have dabbled in futurism at any point in the last thirty years, you have probably come across the work of Ray Kurzweil[5]. In my opinion, the man has influenced the conversation around the trajectory of technology more than anyone else over the past few decades, most notably through his formulation of the Technological Singularity. If you have never heard of him, consider this tweet as a first impression:

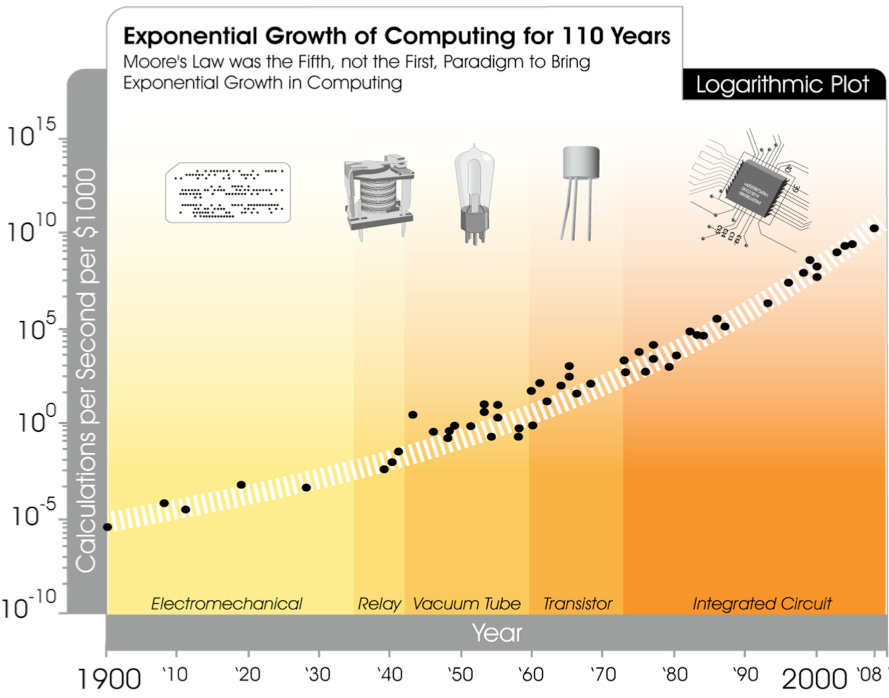

Jokes aside…if you are a reader of Methuselah’s Library, you are probably already familiar with the Singularity – that somewhat nebulous point in time when technology will accelerate so quickly that we cannot even begin to comprehend its nature or implications. Usually, including in Kurzweil’s conception of the Singularity, this involves the advent of some kind of artificial superintelligence that continuously increases its cognitive capabilities, resulting in a runaway intelligence explosion[6]. The central piece of evidence underpinning this thesis is the long and impressively constant superexponential growth in computation over the past century:

Extrapolate this graph further, and at some point in the next few decades (Kurzweil very precisely predicts 2045), we will possess the computational power to create god-like artificial general intelligence (AGI), at which time the Singularity will be upon us. And here we see where Kurzweilian futurism most glaringly conflicts with Hoelian futurism: the centrality of AGI in bringing about the Singularity. In stark contrast to those who expect and welcome the invention of AGI, Hoel has made his thoughts on the matter clear. Consider this passage from “We need a Butlerian Jihad against AI”:

An actual progressive research program toward strong AI is immoral. You’re basically iteratively creating monsters until you get it right. Whenever you get it wrong, you have to kill one of them, or tweak their brain enough that it’s as if you killed them.

Far more important than the process: strong AI is immoral in and of itself. For example, if you have strong AI, what are you going to do with it besides effectively have robotic slaves? And even if, by some miracle, you create strong AI in a mostly ethical way, and you also deploy it in a mostly ethical way, strong AI is immoral just in its existence. I mean that it is an abomination. It’s not an evolved being. It’s not a mammal. It doesn’t share our neurological structure, our history, it lacks any homology. It will have no parents, it will not be born of love, in the way we are, and carried for months and given mother’s milk and made faces at and swaddled and rocked.

And some things are abominations, by the way. That’s a legitimate and utterly necessary category. It’s not just religious language, nor is it alarmism or fundamentalism. The international community agrees that human/animal hybrids are abominations—we shouldn’t make them to preserve the dignity of the human, despite their creation being well within our scientific capability. Those who actually want to stop AI research should adopt the same stance toward strong AI as the international community holds toward human/animal hybrids. They should argue that it debases the human. Just by its mere existence, it debases us. When AIs can write poetry, essays, and articles better than humans, how do they not create a semantic apocalypse? Do we really want a “human-made” sticker at the beginning of film credits? At the front of a novel? In the words of Bartleby the Scrivener: “I would prefer not to.”

Since we currently lack a scientific theory of consciousness, we have no idea if strong AI is experiencing or not—so why not treat consciousness as sacred as we treat the human body, i.e., not as a thing to fiddle around with randomly in a lab? And again, I’m not talking about self-driving cars here. Even the best current AIs, like GPT-3, are not in the Strong category yet, although they may be getting close. When a researcher or company goes to make an AI, they should have to show that it can’t do certain things, that it can’t pass certain general tests, that it is specialized in some fundamental way, and absolutely not conscious in the way humans are. We are still in control here, and while we are, the “AI safety” cohort should decide whether they actually want to get serious and ban this research, or if all they actually wanted to do was geek out about its wild possibilities. Because if we're going to ban it, we need more than just a warning about an existential risk of a debatable property (superintelligence) that has a downside of unknown probability.

Tell us how you really feel, Erik. This repudiation of the development of AGI is remarkable in that it stems from a commitment to upholding biological human dignity, and not simply from the cold calculations of every Effective Altruist’s favorite category of existential risk[7]:

While I think serious attempts to quantify existential risk are important, we should recognize that Hoel’s grievances against AGI are distinct from the sort of utilitarian approach so often employed by the Rationalist community. That is, Hoel approaches the problem of machine intelligence like an actual human being instead of…well, a machine.

And while I do think Hoel is directionally correct in opposing the rapid development of superintelligence, which certainly threatens the preservation of a human future, the draconian nature of his remedy – a complete moratorium, no…a jihad – is where I start to break with Hoel[8]. Perhaps a libertarian bias clouds my judgment, but I do not think a total ban on AI research would be feasible or enforceable in reality. It also replaces one form of extremism with another, which does not seem to be the appropriate prescription for the problem[9].

That said, despite not fully agreeing with the degree to which Hoel objects to AGI, I am encouraged that there are voices countering the Kurzweilian program, which seems more concerned with accelerating the creation of AGI than with contemplating its risks. Thus, if we lived in a hypothetical world where the only choice was between Kurzweilian accelerationism and a Hoelian moratorium, I would choose the latter.

Having discussed how the Kurzweilian and Hoelian perspectives are nearly diametrically opposed with respect to AI, we will now consider other important differences. While an AGI-induced Singularity is the core element of Kurzweilian futurism, there are other aspects of this outlook that clash with Hoel’s central principle of preserving the dignity of biological humans.

Transcending biology = inhuman future?

We need look no further than the subtitle of Kurzweil’s magnum opus, The Singularity is Near, to understand the litany of ways in which a Kurzweilian future would usher in the inhuman future that Hoel warns against. Its tagline, “When Humans Transcend Biology”, makes explicit what is under contention.

Importantly, it is not the advent of superintelligence alone that risks debasing a human-dominated future. You see, there are many ways to forsake our humanity if you seek to shed these pesky meat-suits we call bodies and accelerate towards the Singularity. The crucial question here is as follows: to what extent does transcending biology risk forsaking our humanity?

Now, any prudent commentator on the future should answer this question with humility and admit that it is a very open question. We simply do not know what consequences – be they positive or negative – radical alterations to, or outright replacements of, our biology will have. However, we can speculate about the collection of technologies that would enable humanity to transcend biology. In addition to the already discussed creation of superintelligence, I have named four technological programs – all central aims of Kurzweilian futurism – that potentially threaten our humanity, which roughly mirror what Hoel has expressed concern about previously:

1. Creating extremely immersive virtual realities.
2. Enhancing our minds and bodies via advanced biotechnology.
3. Integrating collectively with brain-computer interfaces.
4. Uploading or emulating our neural processes in inorganic substrates.

To be clear, I am not asserting that any of these projects will necessarily lead to catastrophe, but the major issue with the Kurzweilian Consortium is an unwillingness to take seriously the possibility that these technologies may lead to an undesirable outcome for humanity. There are many ways in which the future can unravel if we are not careful. In short, this is why I find Hoelian futurism so refreshing; humans, and the desire to preserve that which we already know makes a human life worth living, are the indispensable ingredients that the future must contain. A Kurzweilian future provides no such assurances, and if you squint, you may glimpse that it guarantees the opposite.

Nor is it my intention to brand any particular group as nefarious, including those who subscribe to Kurzweilian ideas. Indeed, there was a time in my youth when I would have counted myself as a transhumanist singularitarian. And I still sympathize with the enthusiasm such people have for a potentially wondrous future that the aforementioned technologies could unlock. However, it is my suspicion that the future can go wrong in far more ways than it can go right…and if the world adopts a Kurzweilian rather than a Hoelian perspective, the likelihood of failing the future is far higher.

This leaves us with the obvious next question: if we are not to accelerate into a bewildering and dangerous Kurzweilian future, then how should we proceed?

Temporary technological incrementalism and a path forward

At this point, you would be forgiven for accusing me (to say nothing of Hoel) of being a raving Luddite, so let me attempt to disabuse you of that notion with some qualifications and a proposed path forward. Part of the irony in writing this essay is that, along many axes, I actually think technological progress is slowing down, which puts me, along with Hoel, firmly in the Stagnationist camp – a perspective I have touched on previously. Being someone who is broadly excited about the future and loves technology, I would be thrilled to see at least some of these trends reverse. Regardless, it is possible…perhaps even likely, that technological progress becomes more incremental – a trajectory that Hoel appears to endorse:

If you want to predict the future accurately, you should be an incrementalist and accept that human nature doesn’t change along most axes. Meaning that the future will look a lot like the past. If Cicero were transported from ancient Rome to our time he would easily understand most things about our society. There’d be a short-term amazement at various new technologies and societal changes, but soon Cicero would settle in and be throwing out Trump/Sulla comparisons (or contradicting them), since many of the debates we face, like what to do about growing wealth inequality, or how to keep a democracy functional, are the same as in Roman times.

To see what I mean more specifically: 2050, that super futuristic year, is only 29 years out, so it is exactly the same as predicting what the world would look like today back in 1992. How would one proceed in such a prediction? Many of the most famous futurists would proceed by imagining a sci-fi technology that doesn’t exist (like brain uploading, magnetic floating cars, etc), with the assumption that these nonexistent technologies will be the most impactful. Yet what was most impactful from 1992 were technologies or trends already in their nascent phases, and it was simply a matter of choosing what to extrapolate.

In a sense, this is a hopeful trend with respect to managing the inhuman future that Hoel warns of, as it may give us more time to acclimate to whatever disruptive technologies are introduced. However, I do not think we should count on this outcome, given the complacency such an assumption would breed in the face of possible catastrophe.

This concern is bolstered by the fact that most of the technologies Hoel worries about are undergirded by progress in information technology, which is the one technological domain that does not appear to be facing headwinds – Peter Thiel’s “atoms versus bits” thesis. Furthermore, technological trends are subject to change, and it is certainly possible that an unexpected discontinuity could interrupt a regime of apparent technological incrementalism.

In any case, I cannot in good conscience support a technological program that would bring about the implicated technologies in a compressed period of time, such as two or three decades, which is what Kurzweil hopes for and predicts. Instead, I would propose a policy of technological incrementalism be applied to those technologies that threaten our humanity[10]. I suppose this makes me a kind of selective Luddite, but given the conundrum we find ourselves in, it is a label I will reluctantly accept.

That said, most of my misgivings regarding Kurzweilian futurism and my current preference for a more Hoelian outlook boil down to a matter of approach and timing. I do not, in fact, think that the ultimate aims of the Kurzweilian Consortium, even AGI, are off the table in perpetuity, which is where I might part ways with Hoel.

As alluded to above, the primary flaw in Kurzweil’s ideas, and perhaps in Singularitarianism more generally, is the reckless haste with which technology is pursued. Technological acceleration is treated as an immutable destiny, which leaves no room for the cautious contemplation that the future deserves. Alternatively, if the technological programs that risk violating our humanity are approached with the time, preparation, and wisdom they require to get right, then a timeline that both contains Kurzweilian wonders and preserves the essence of what makes humans human may be possible.

Radical life extension as the portal to an optimal future

While not immediately obvious, I believe radical life extension may be the key to unlocking such a future. Furthermore, I think ending aging should be our first priority as a civilization, partially due to the advantages it would grant in facing a future bent on diluting our humanity. Let me explain why.

First, the pursuit of radical life extension is congruous with both the Kurzweilian and Hoelian perspectives. Kurzweil has long talked about how longevity science will serve as a bridge to more radical forms of digital immortality, and Hoel includes the defeat of aging in his conception of an acceptable future:

Such future humans, even if radically different in their lives than us, even if considered “transhuman” by our standards (like having eliminated disease or death), could likely still find relevancy in Shakespeare.

More importantly though, serious progress in the battle against aging might serve to dampen the frenetic pace at which the Kurzweilian Consortium seeks to achieve its aims. I suspect that part of the motivation underlying the race to develop AGI is the belief that such technology is required to defeat death – or at least required to do so within the lifetime of the individual nerd in question[11]. The stakes are lowered once longevity escape velocity seems like a realistic possibility. Who cares if the Singularity is delayed another thousand years? You are already endowed with a practically indefinite lifespan. As we have discussed previously, radical life extension has the inherent byproduct of lowering time preference and incentivizing a long-term mindset.

In addition to tempering the ambitions of the Kurzweilian Consortium, radical life extension might also convince someone of a Hoelian bent that a future populated with genetically enhanced individuals harmoniously interfacing with superintelligent synthetic minds might not be completely devoid of humanity. While we have already begun to explore how radical life extension will enable us to acquire knowledge more deeply and broadly, it will also allow us to better decide what to do with that knowledge. Wisdom cannot be bought; it is earned through experience that is paid for in years lived.

Thus, while I would not trust our current civilization with the task of, for example, ethically and safely bringing AGI into the world, I would expect a society populated by multicentenarian sages to have a far higher chance of success. Or, as Hoel (when writing about something entirely unrelated) poetically put it:

Right now we are children in a dark room, waiting for the hallway light to turn on and an adult to come save us.

For these reasons, I see radical life extension as a portal to an optimal future and the single technological program that can perhaps bridge the Kurzweilian and Hoelian perspectives.

Conclusions and future directions

Unfortunately, there are issues with my solution to the aforementioned Kurzweilian dangers, not least among them the following: at the current rate, anti-aging technologies appear to be progressing more slowly than at least some of the riskier technologies discussed above. Even in an environment where all technological progress is less rapid than in the past, this is still a problem. Thus, until this is no longer the case, I will consider myself more of a Hoelian than a Kurzweilian. Promethean fire can either light and warm your town or set ablaze everything you hold dear…and at the moment we are risking a conflagration.

On that rather uplifting note, I will conclude with the observation that the ideas discussed herein are underdeveloped. I hope to develop them into a more coherent outlook – my own breed of futurism that can stand alongside the Kurzweilian and Hoelian perspectives[12].

In the meantime, we will return to the Methuselah Dividend series, where we will explore the “accretion of wisdom”, a concept briefly invoked above that deserves much more attention. As always, if you find this content worthy, please subscribe and spread the good word.

Notes

Erik has also written a novel, The Revelations, and is an accomplished academic in the field of neuroscience. Indeed, Hoel worked with Giulio Tononi on integrated information theory – something even someone such as myself, who knows little about the science of consciousness, has heard about.

If it were, that would be rather ill-advised given that Hoel is far smarter than me.

You should devour all of Hoel’s content, but these are the most pertinent to this essay: We need a Butlerian Jihad against AI, Futurists have their heads in the clouds, How to prevent the coming inhuman future, How AI's critics end up serving the AIs.

While I chose the word “consortium” mostly for alliterative purposes, it works quite well in the literal sense of the term – there actually is a group of loosely affiliated firms and organizations driving towards making a Kurzweilian future a reality. Alphabet/DeepMind, Microsoft/OpenAI, Meta, Tesla, and countless other companies and academic institutions are collectively investing billions to this end, and have a particular interest in AI research and development.

Coincidentally, Kurzweil, after being somewhat inconspicuous in recent years, stepped back into the limelight by appearing on Lex Fridman’s podcast. Hoel took note of Kurzweil’s return as well, mentioning that the podcast was interesting. To be honest, while Kurzweil comes across as slightly more charismatic than usual, he essentially repeats everything he has been saying for the past twenty years – something I have criticized in the past (perhaps too harshly). That said, he plans to release an update to his thesis, The Singularity is Nearer, next year, which I will almost certainly read.

The British mathematician I. J. Good was the first to conceive of this notion, even before the word “singularity” had been applied to the concept.

Speaking of Effective Altruism (EA), Hoel has quite a bit to say on the topic. Hoel’s criticism is timely given the recent disgrace of Sam Bankman-Fried, who was EA’s greatest benefactor. And while EA as a whole has done a lot of good (for we should not judge an entire philosophy by one man’s misdeeds), the sort of pure utilitarianism it employs is not for me.

I fully admit I am not proposing any alternative policy to deal with the very real risks of AI research – another essay for another day.

My preference would be for this to be instituted by a broad, decentralized cultural shift in how industries develop technology. However, I think the chances of events playing out in this manner are vanishingly small.

I would not be surprised if Demis Hassabis, co-founder of DeepMind, believes this. He says as much in the announcement of Isomorphic Labs, the Alphabet subsidiary that will apply AI to biomedical research and drug discovery. Here is the relevant quote:

Biology is likely far too complex and messy to ever be encapsulated as a simple set of neat mathematical equations. But just as mathematics turned out to be the right description language for physics, biology may turn out to be the perfect type of regime for the application of AI.

Fëanorian futurism has a certain ring to it, but perhaps at that point it would be prudent to drop the pseudonym, lest there be confusion.